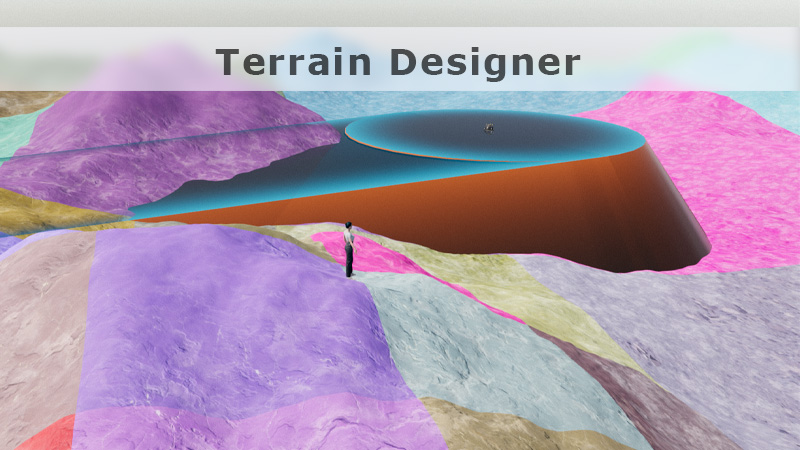

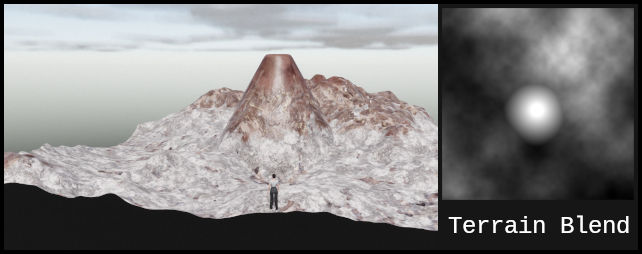

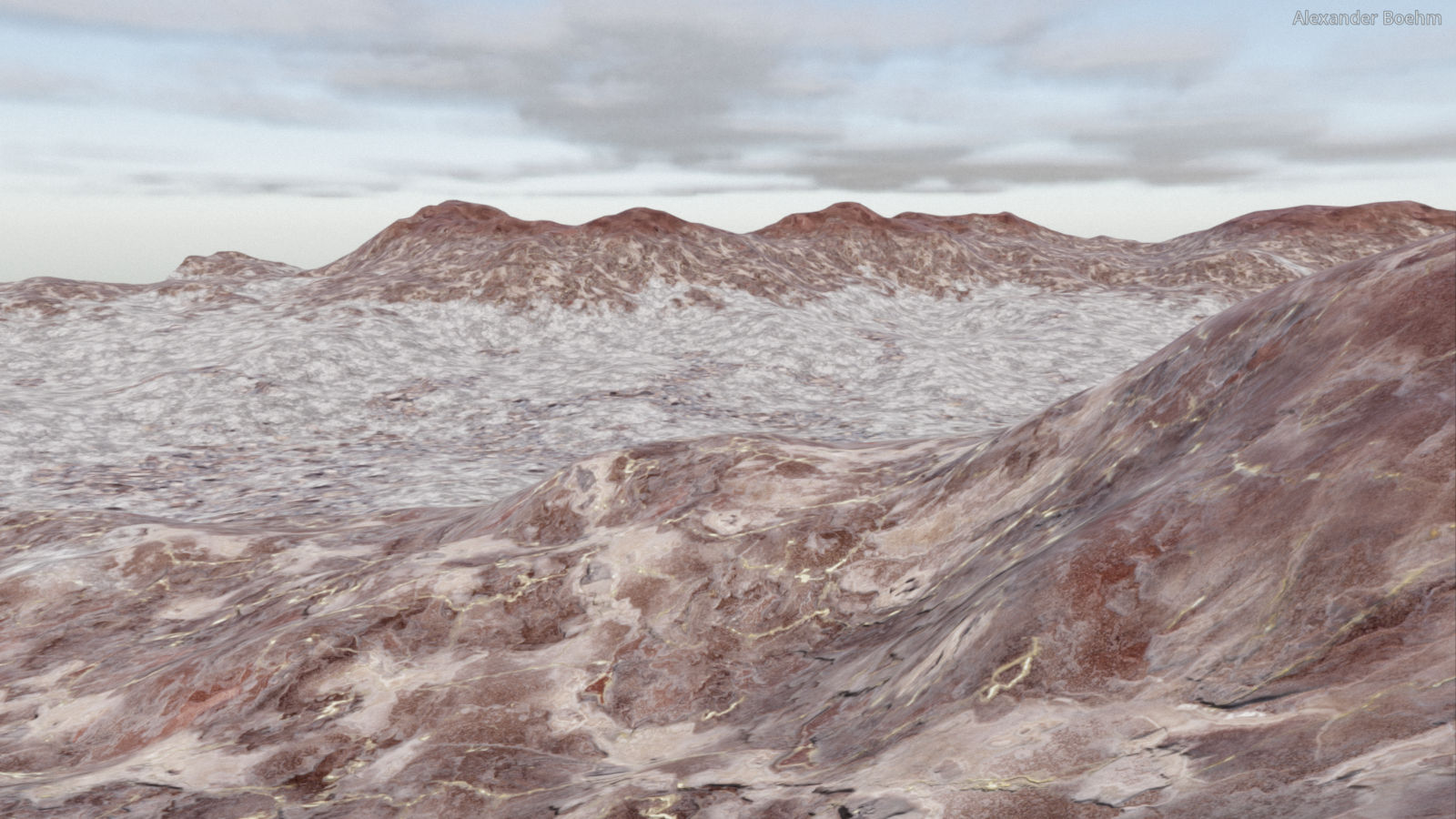

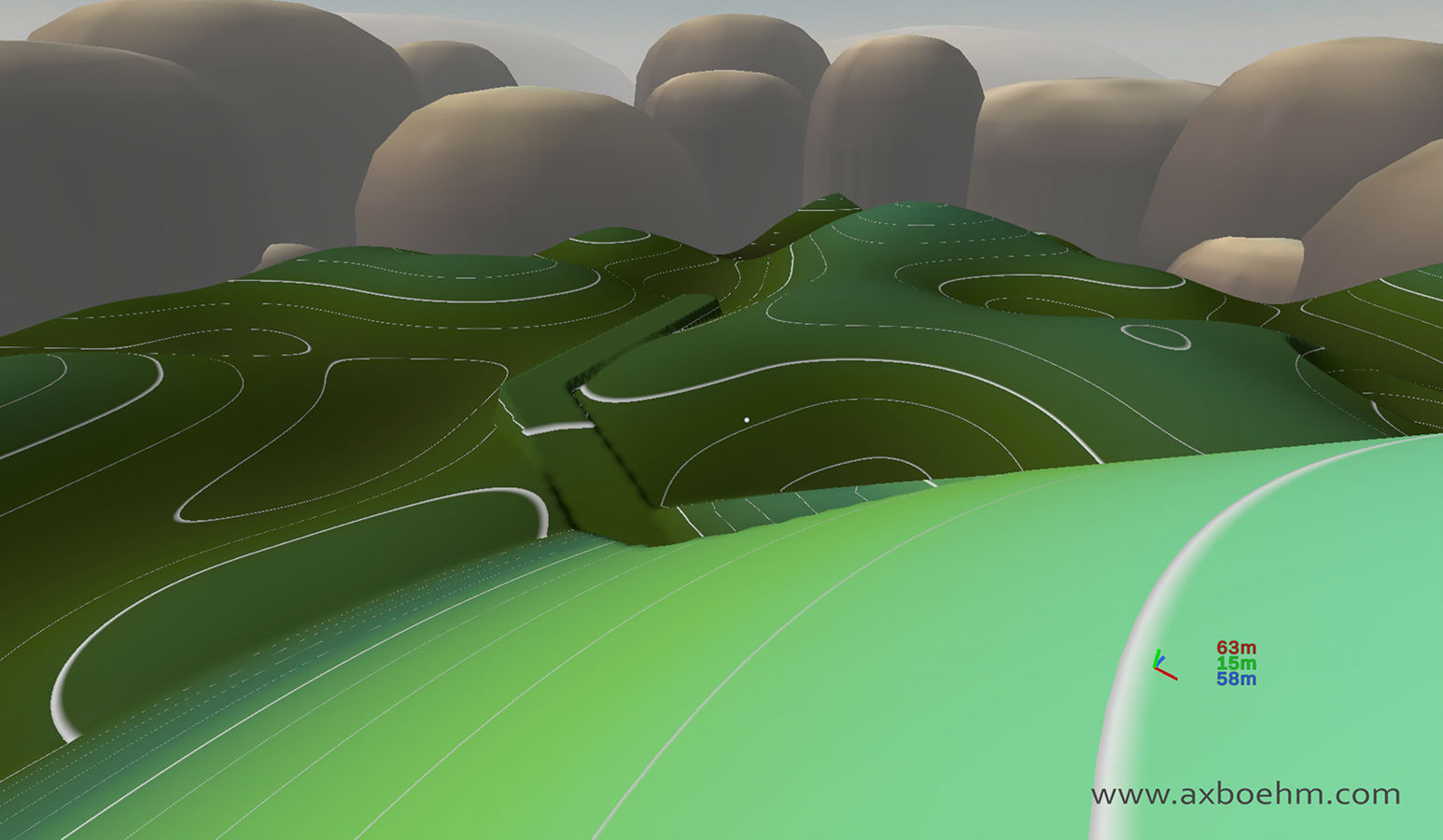

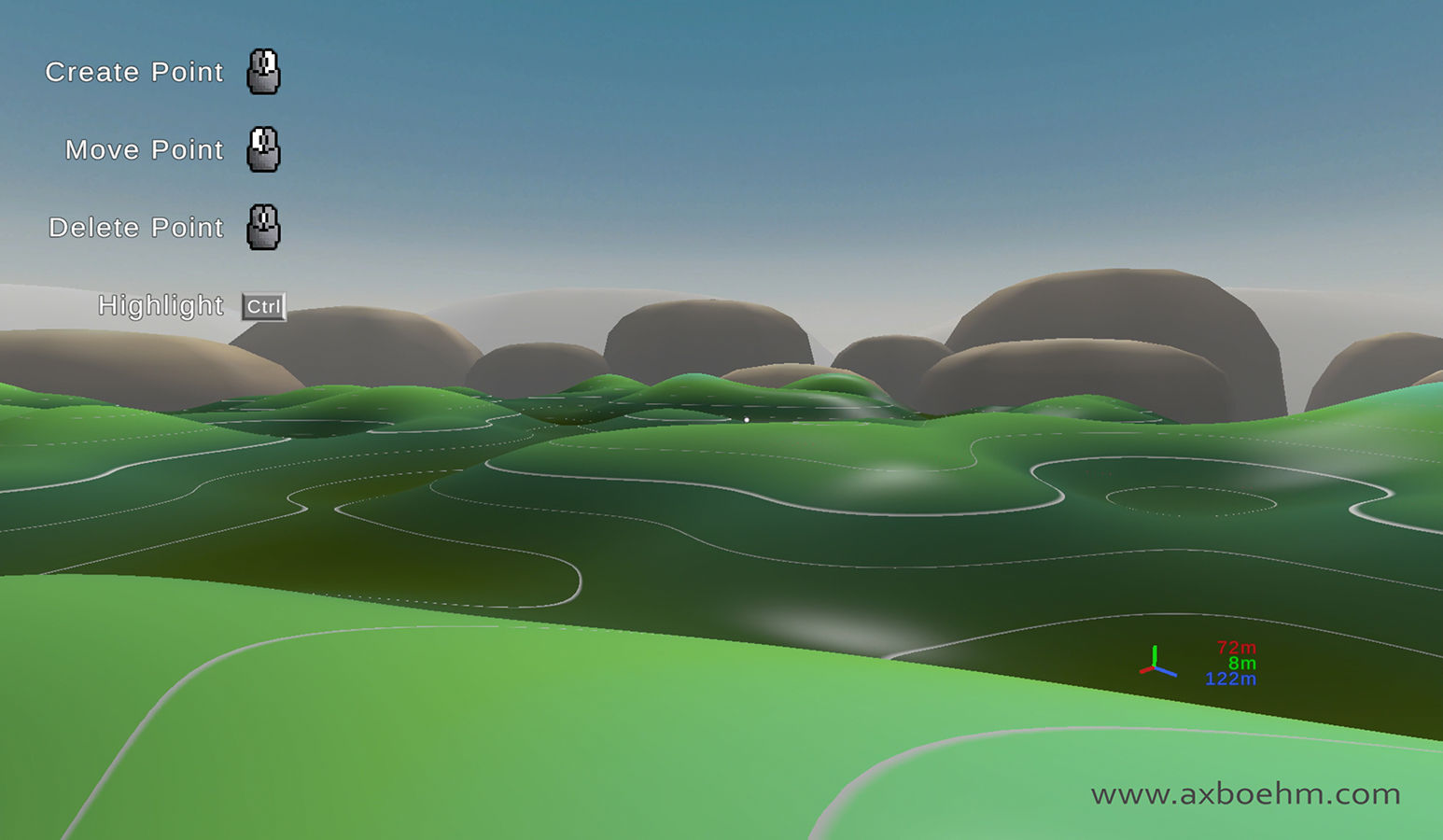

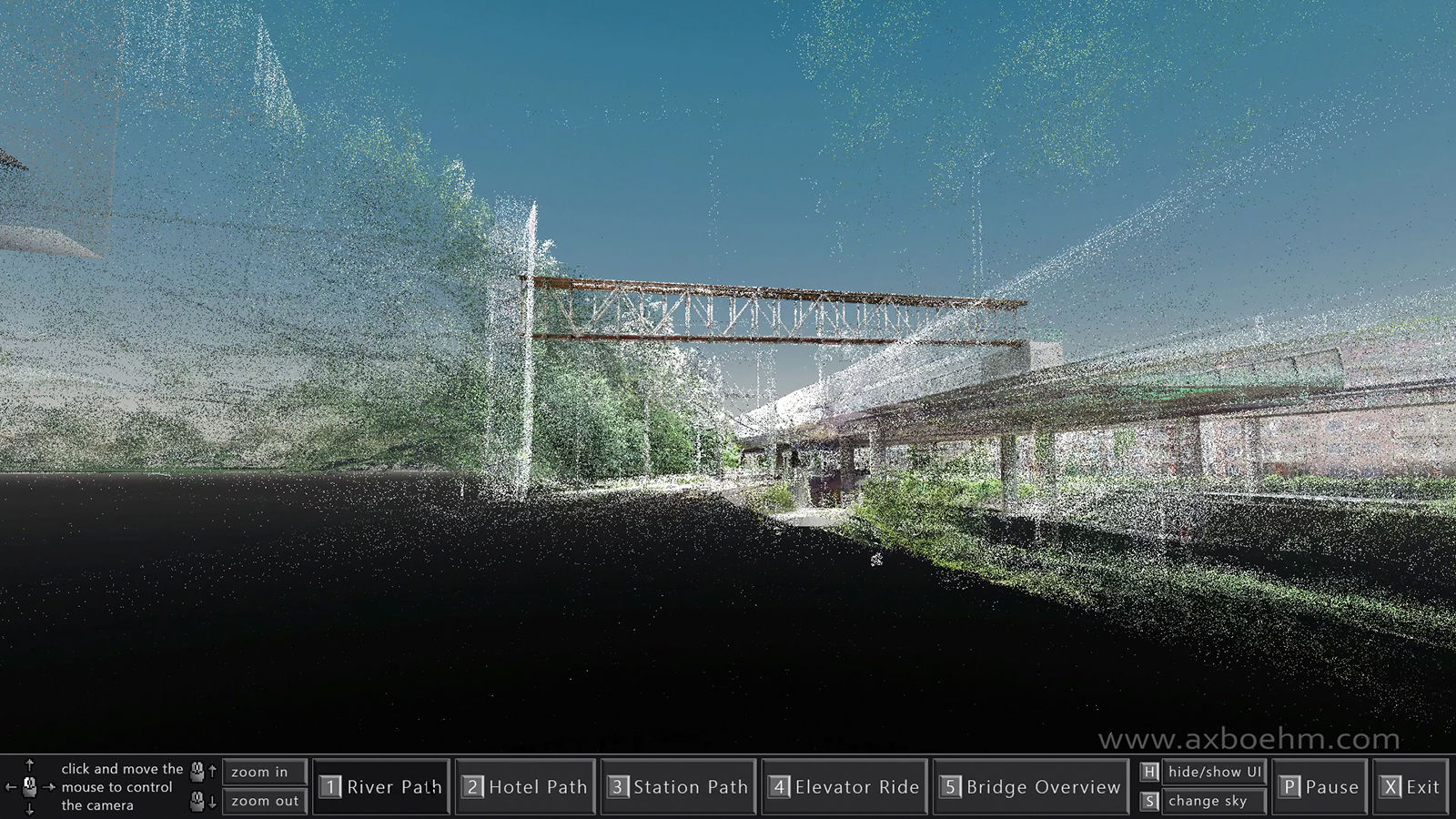

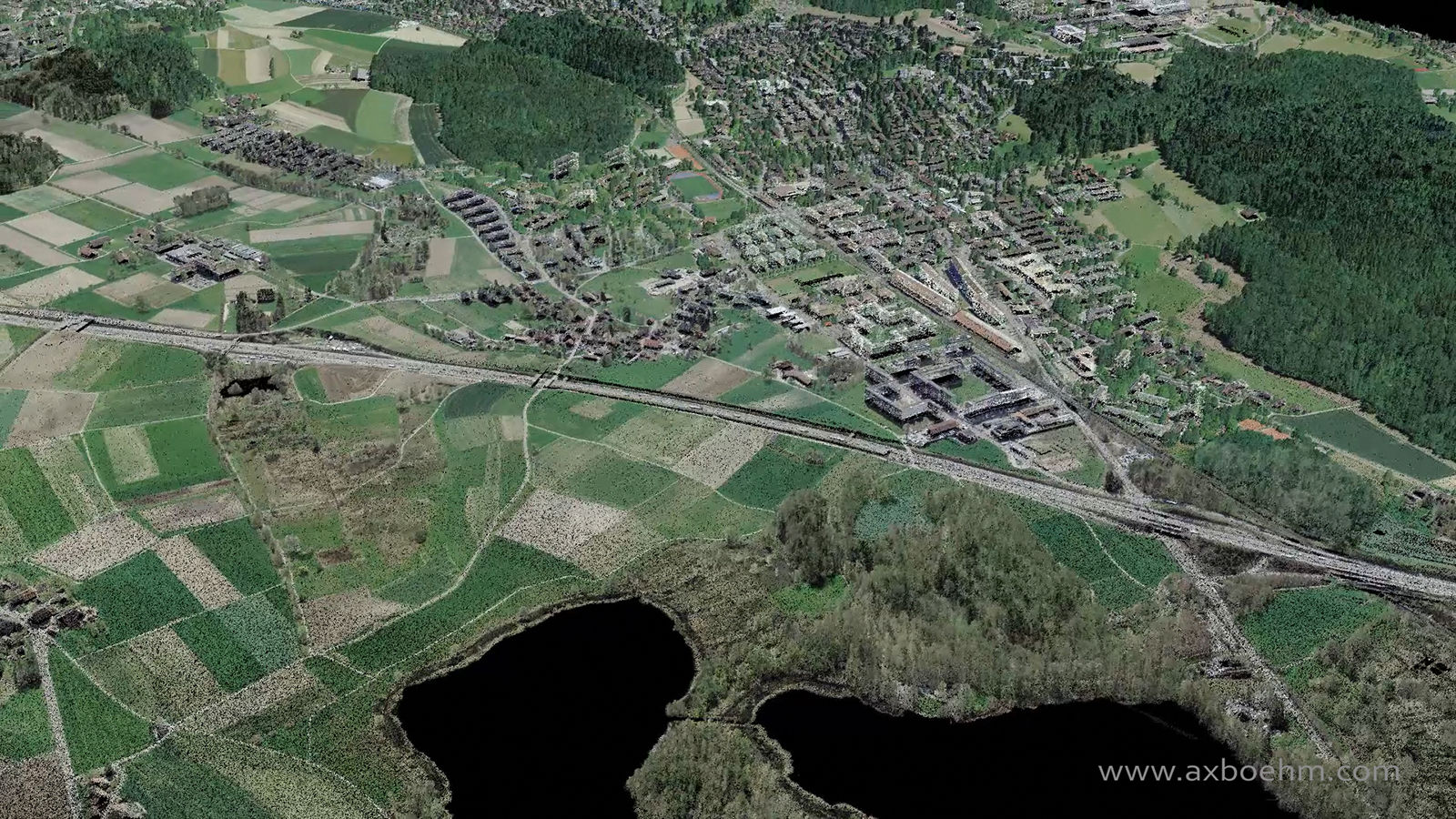

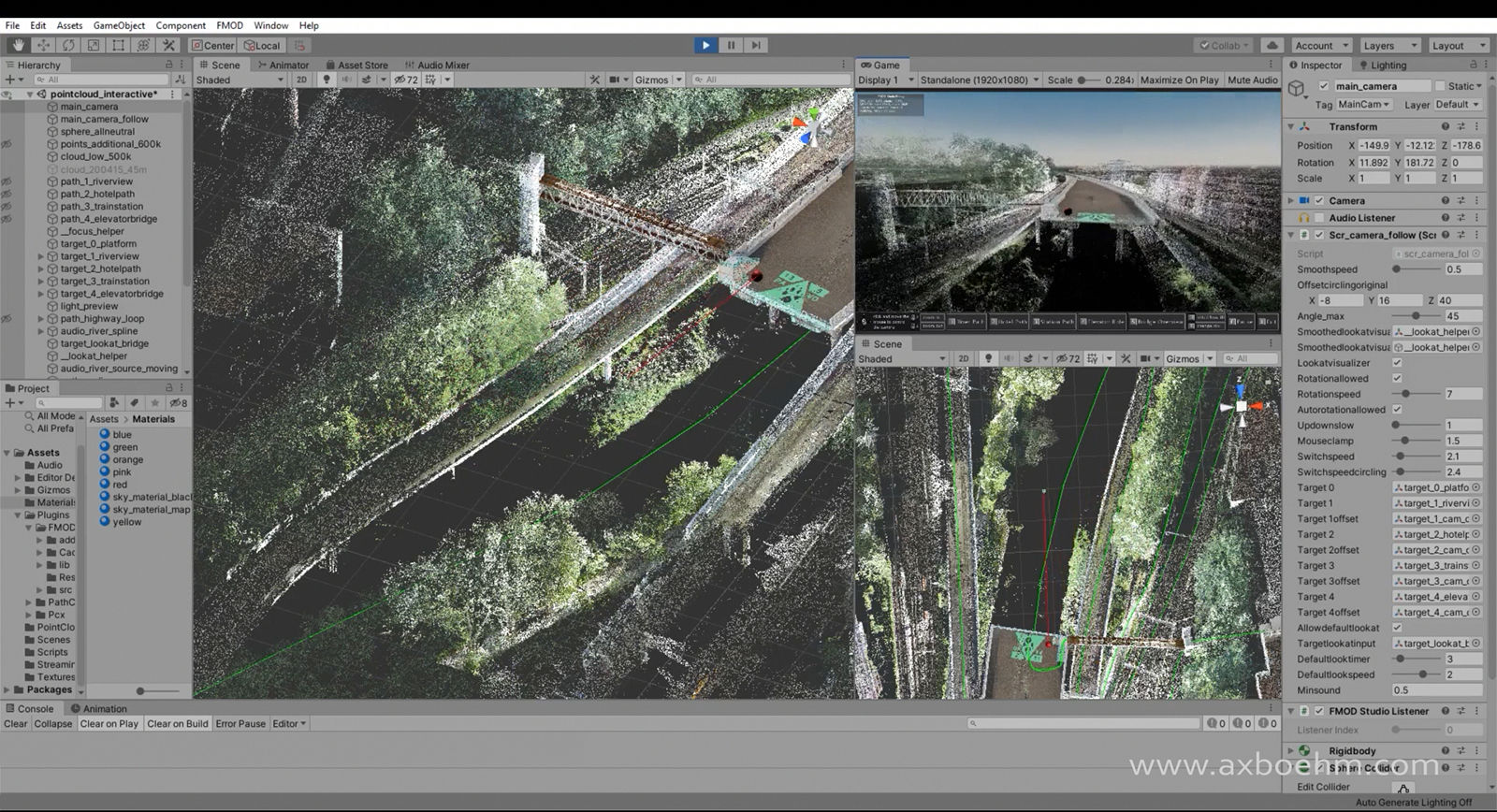

Terrain Designer is a 3D application that lets you walk around a landscape and modify it interactively in real time.

The application uses the Godot game engine (v4.2.1) for rendering and collisions, and is written in C# and Godot shader code.

It is my latest interpretation of an interactive landscape design tool and an evolution of the other projects on this page.

I have made the code publicly available. Take a look if you are interested.

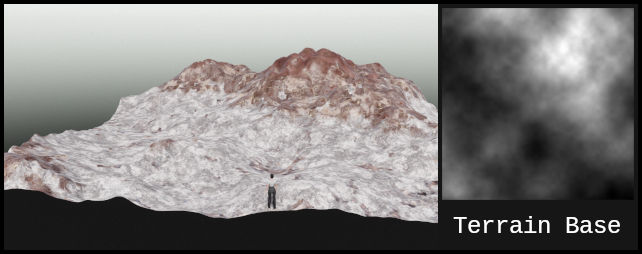

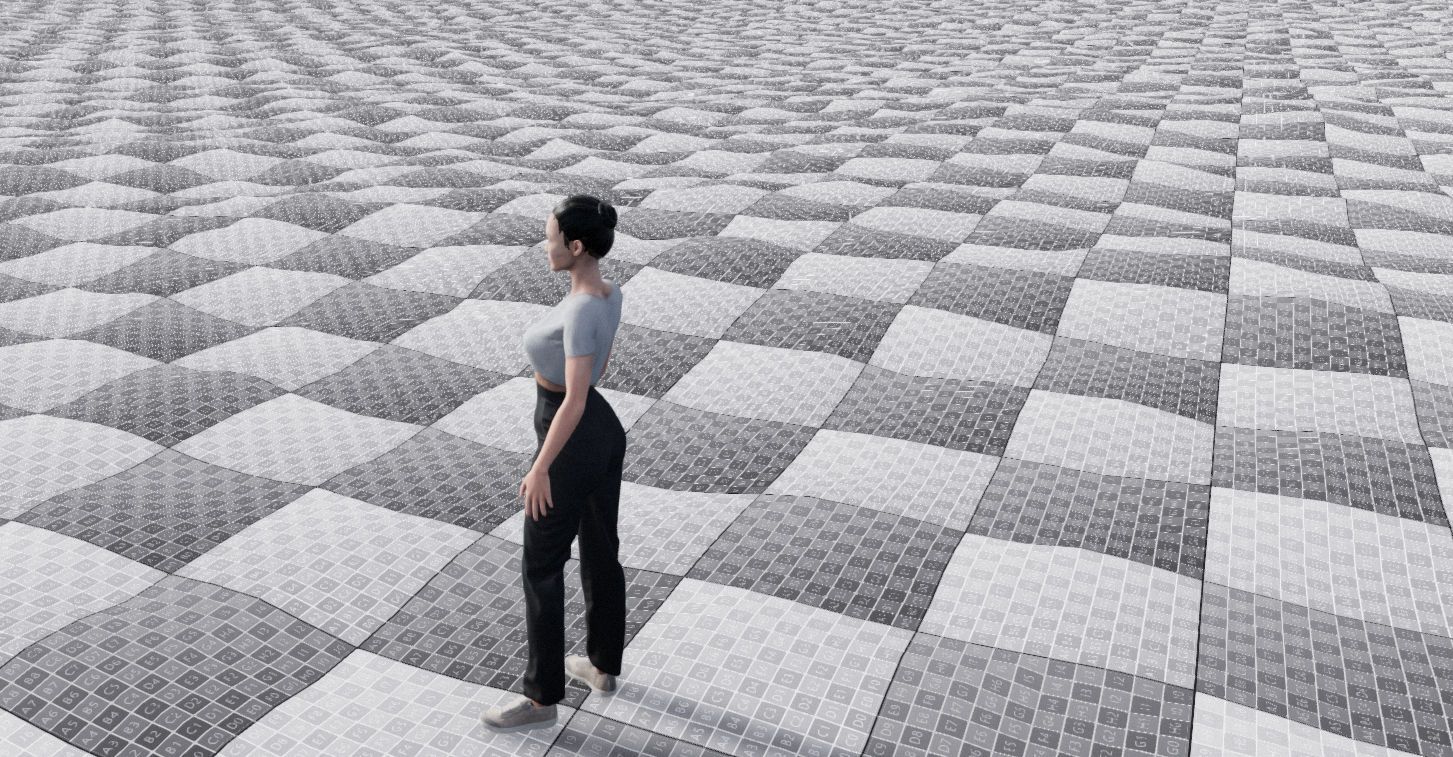

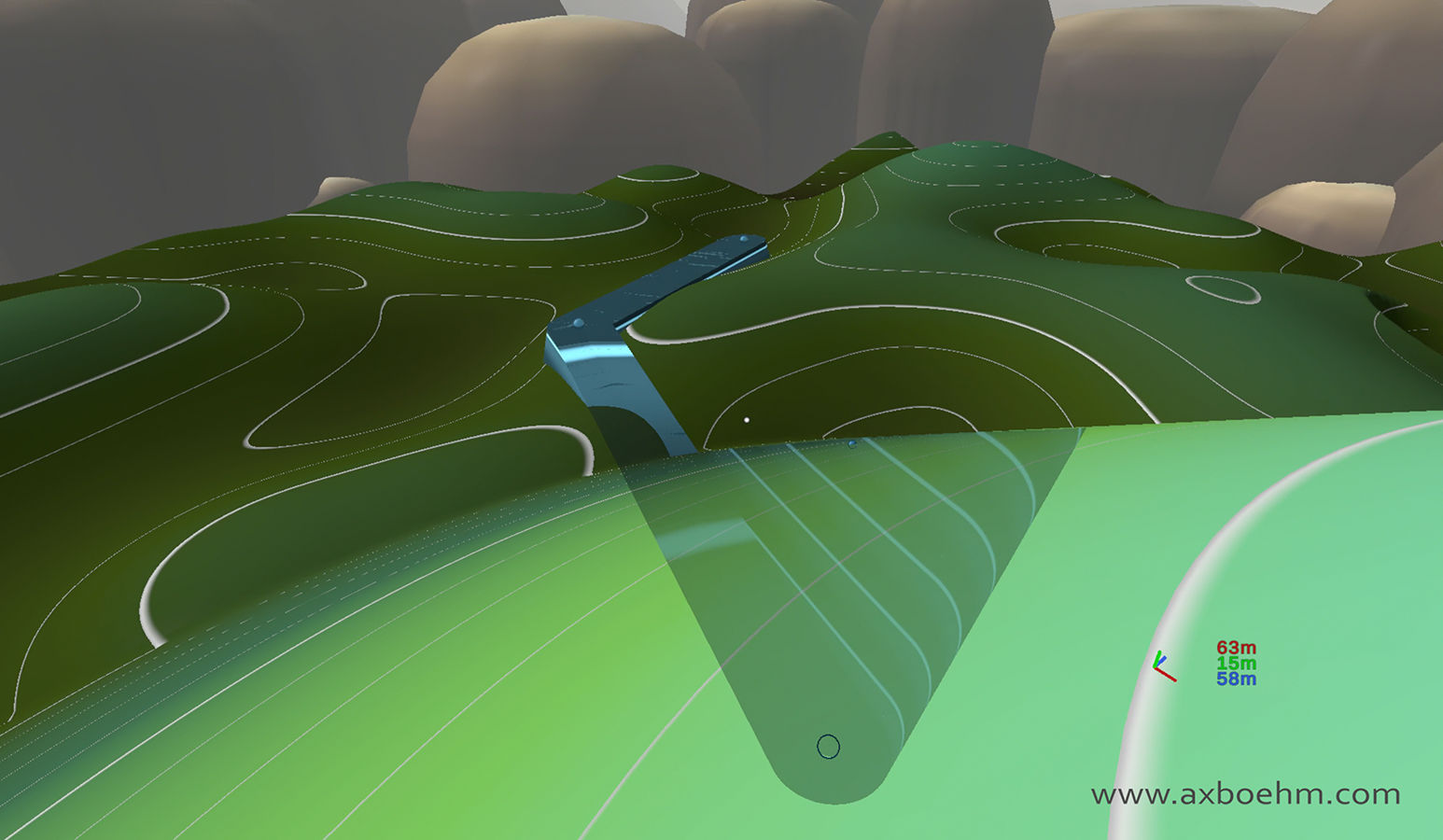

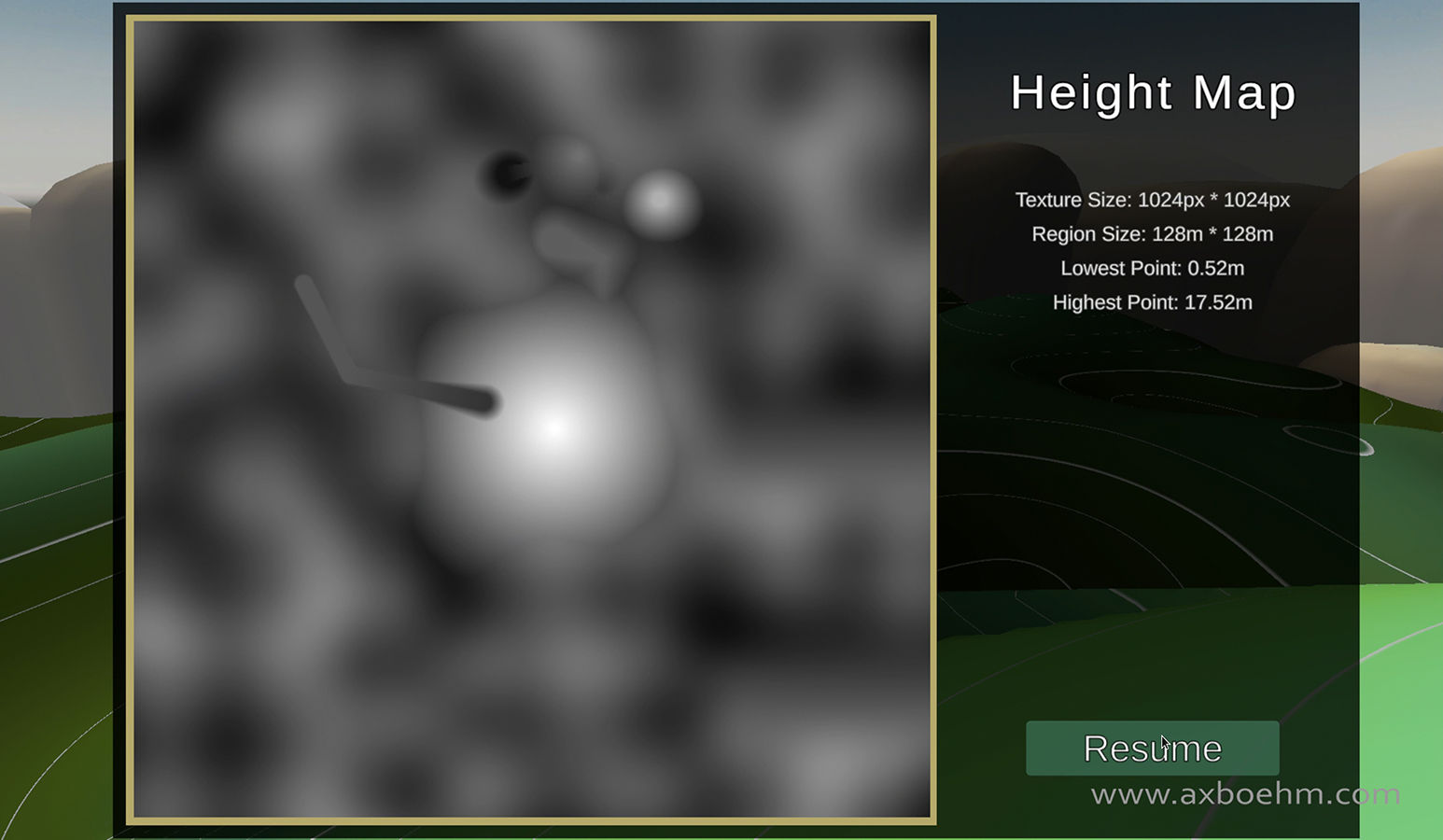

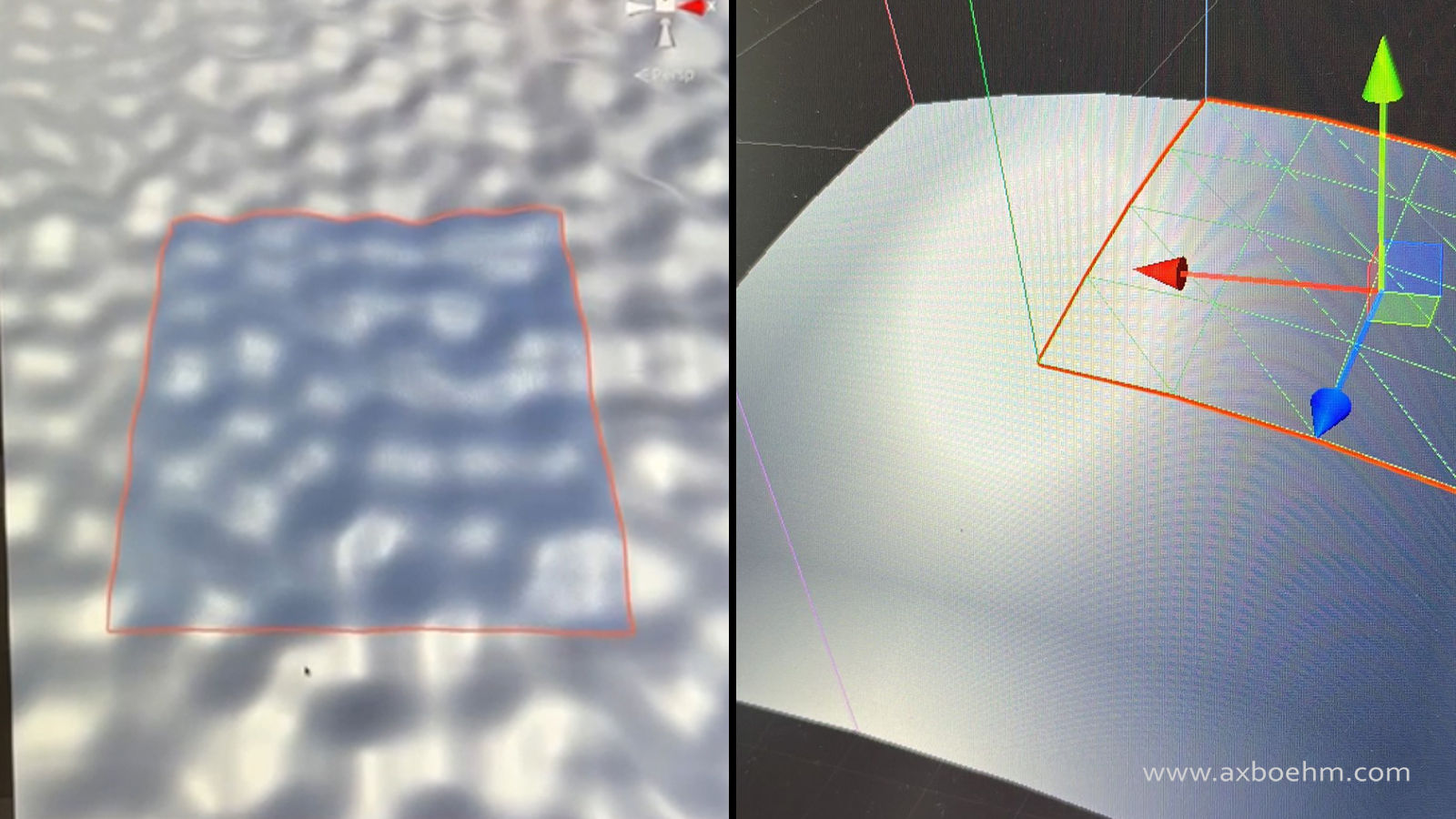

The terrain in the application is represented by a heightmap. Each coordinate of the terrain corresponds to a location on a 2D texture, and the brightness at that location defines how high the terrain is at that coordinate.
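As a rough illustration of the heightmap idea, here is a minimal sketch in Python (not the project's C# or shader code). It assumes the heightmap is a plain grid of brightness values in [0, 1] and that coordinates are normalized to [0, 1]. The function name `sample_height`, the `max_height` scale, and the choice of bilinear interpolation are assumptions made for this example.

```python
def sample_height(heightmap, u, v, max_height=1.0):
    """Bilinearly sample a grid of brightness values in [0, 1].

    heightmap: list of rows, each a list of floats (hypothetical layout).
    u, v: normalized terrain coordinates in [0, 1] (clamped if outside).
    max_height: assumed world-space height for full brightness.
    """
    rows, cols = len(heightmap), len(heightmap[0])

    # Map normalized coordinates to continuous texel coordinates.
    x = min(max(u, 0.0), 1.0) * (cols - 1)
    y = min(max(v, 0.0), 1.0) * (rows - 1)

    # Indices of the four surrounding texels, clamped at the border.
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, cols - 1), min(y0 + 1, rows - 1)
    fx, fy = x - x0, y - y0

    # Blend horizontally on both rows, then blend the rows vertically.
    top = heightmap[y0][x0] * (1 - fx) + heightmap[y0][x1] * fx
    bottom = heightmap[y1][x0] * (1 - fx) + heightmap[y1][x1] * fx
    return (top * (1 - fy) + bottom * fy) * max_height


# A 2x2 heightmap that is dark on the left and bright on the right.
ramp = [[0.0, 1.0],
        [0.0, 1.0]]
print(sample_height(ramp, 0.5, 0.5, max_height=10.0))
```

Interpolating between texels keeps the sampled surface continuous, which matters when something needs a height between grid points.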

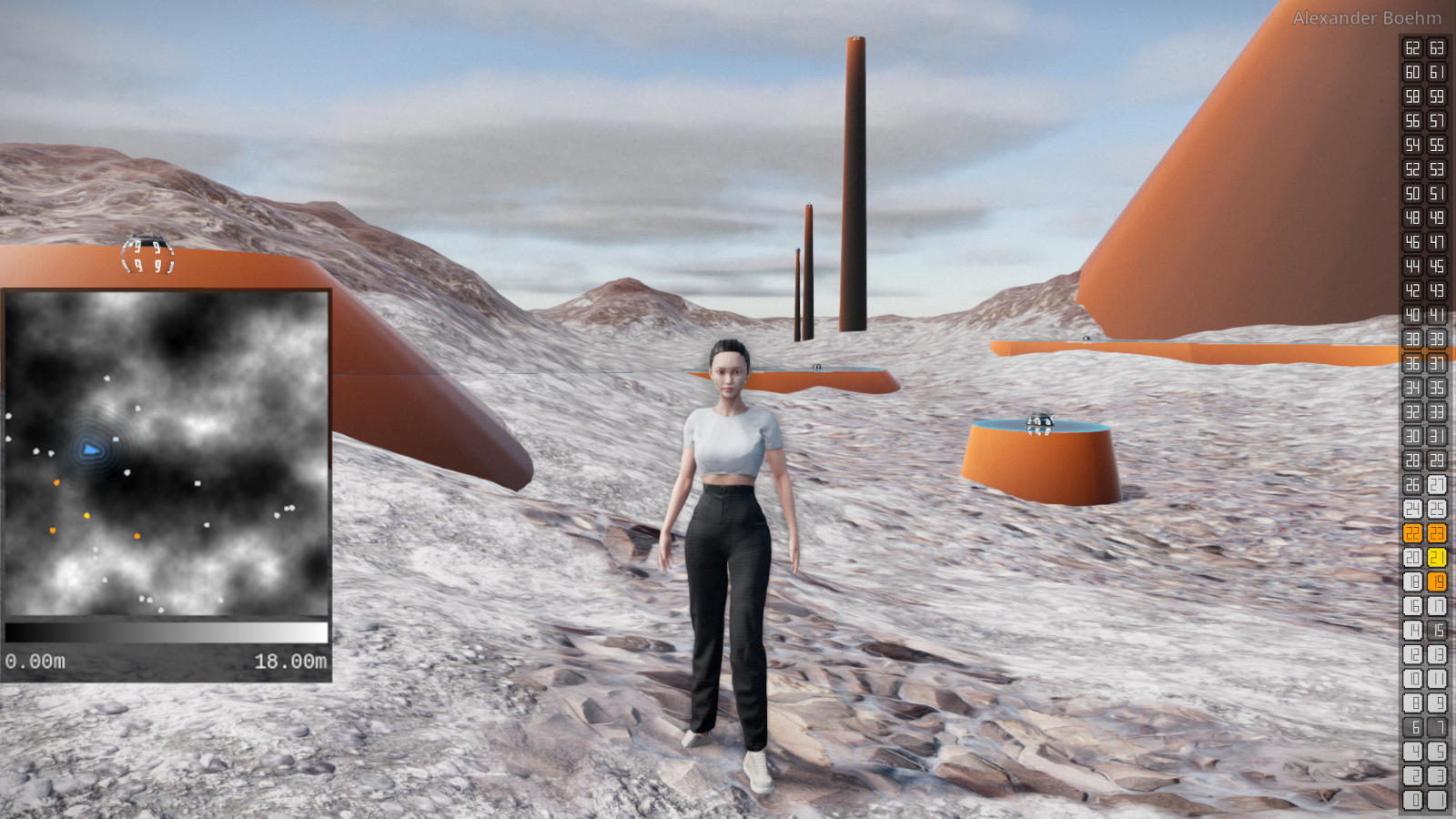

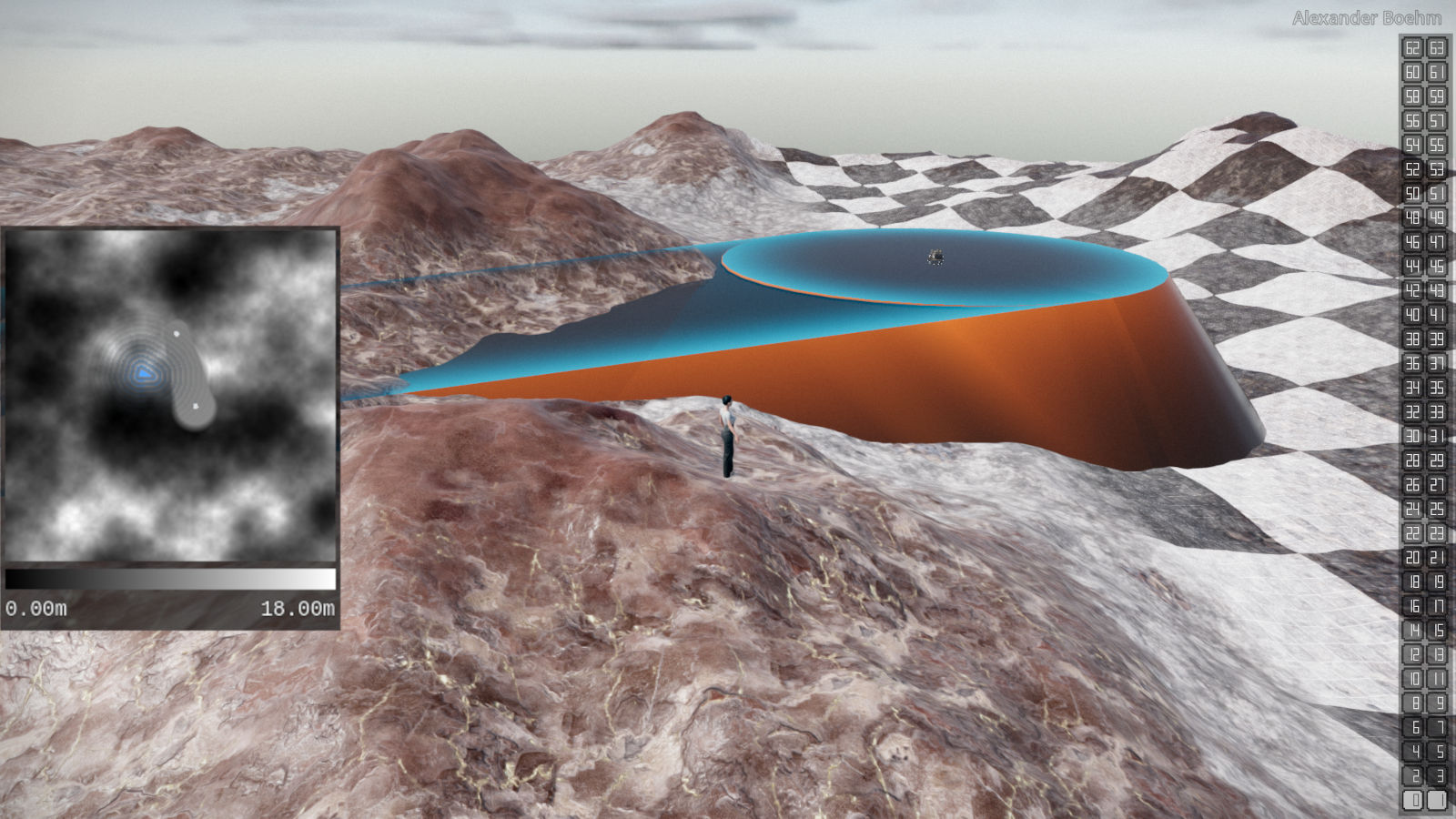

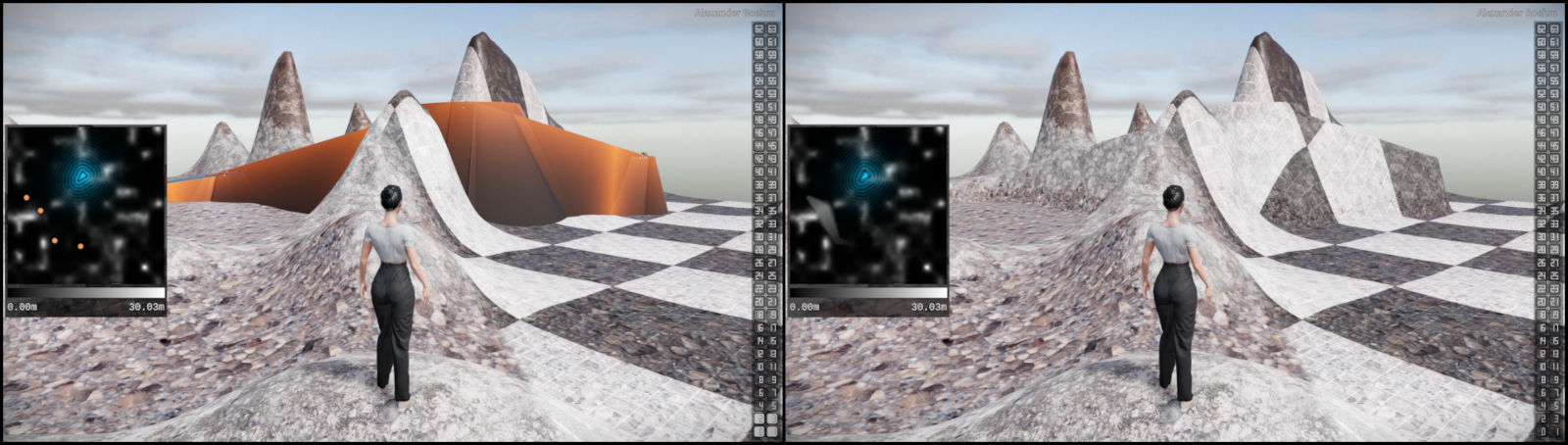

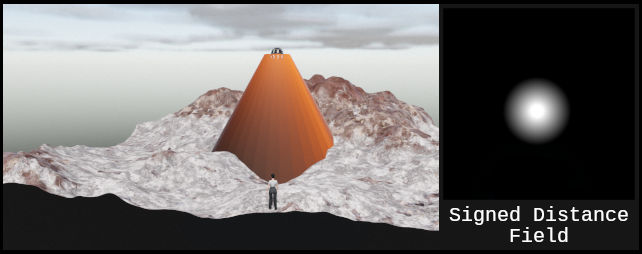

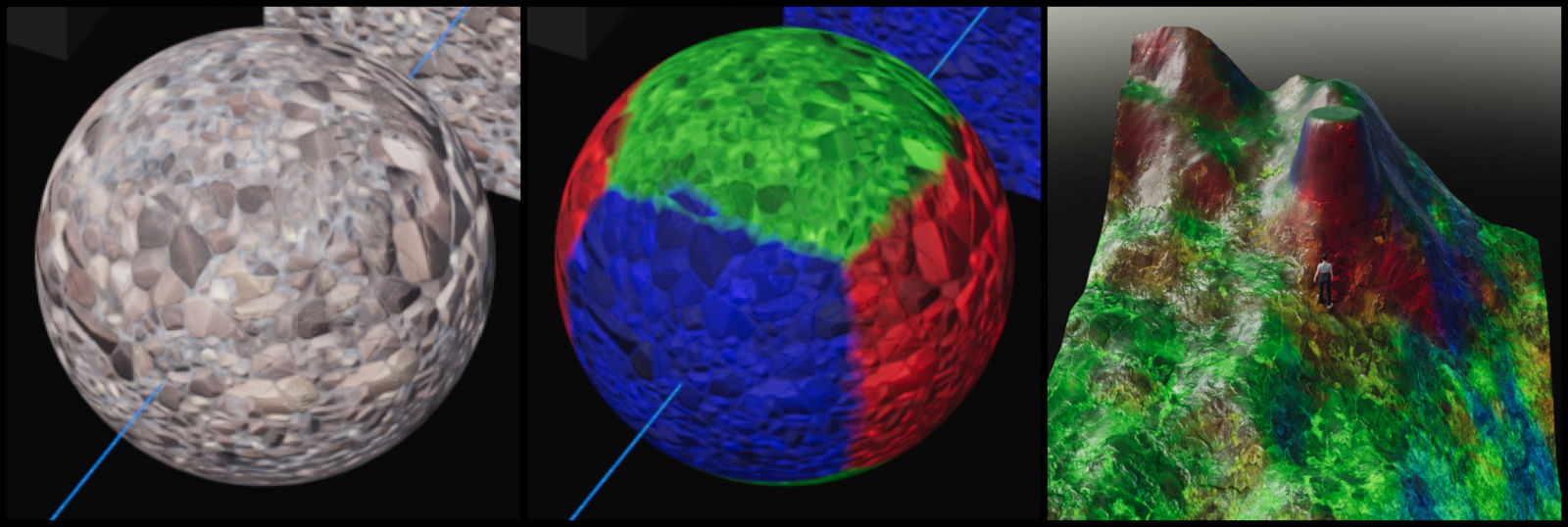

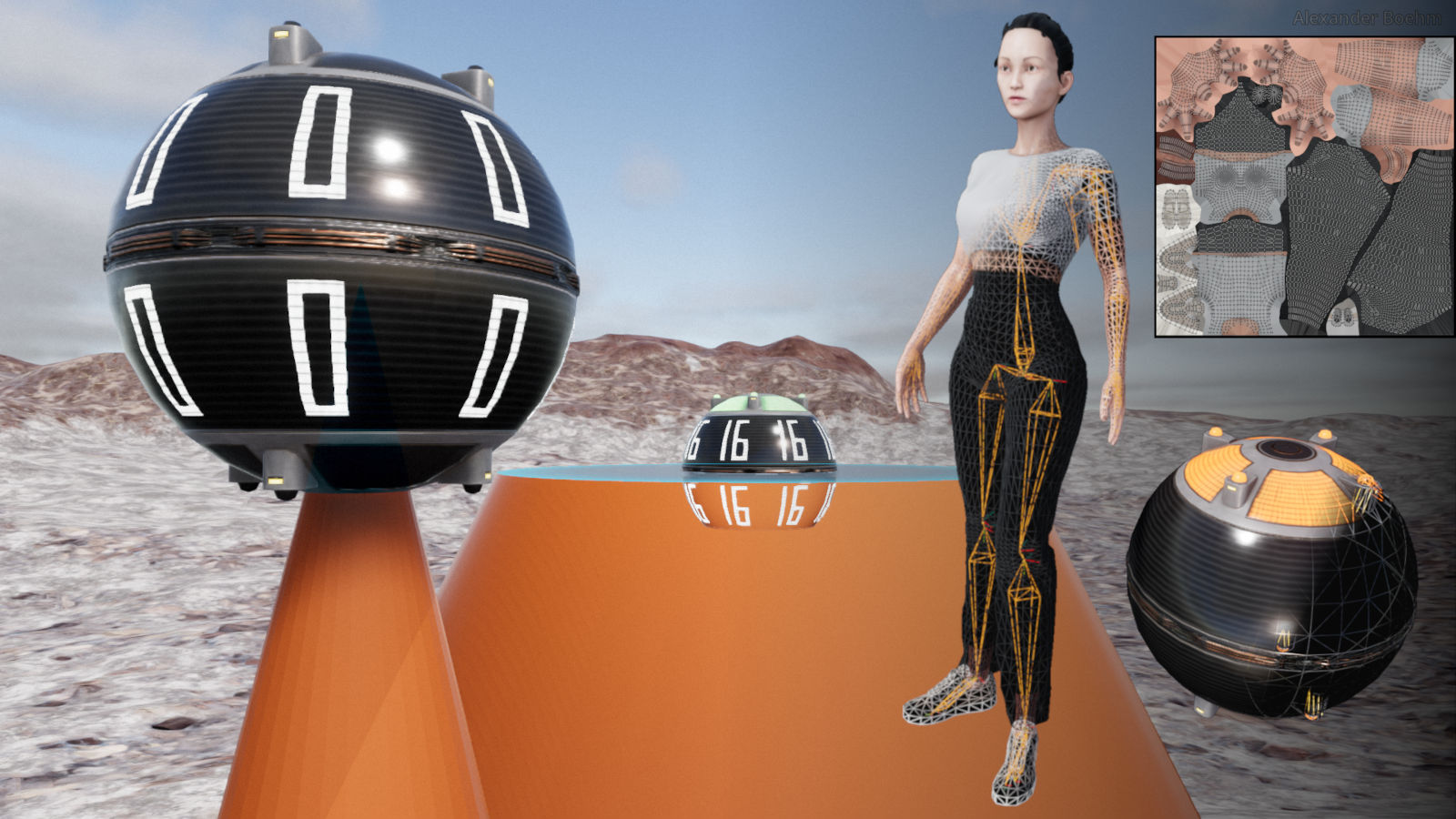

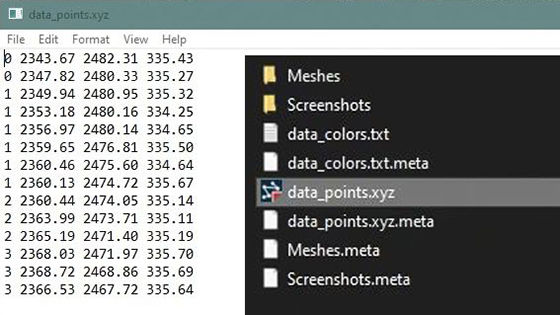

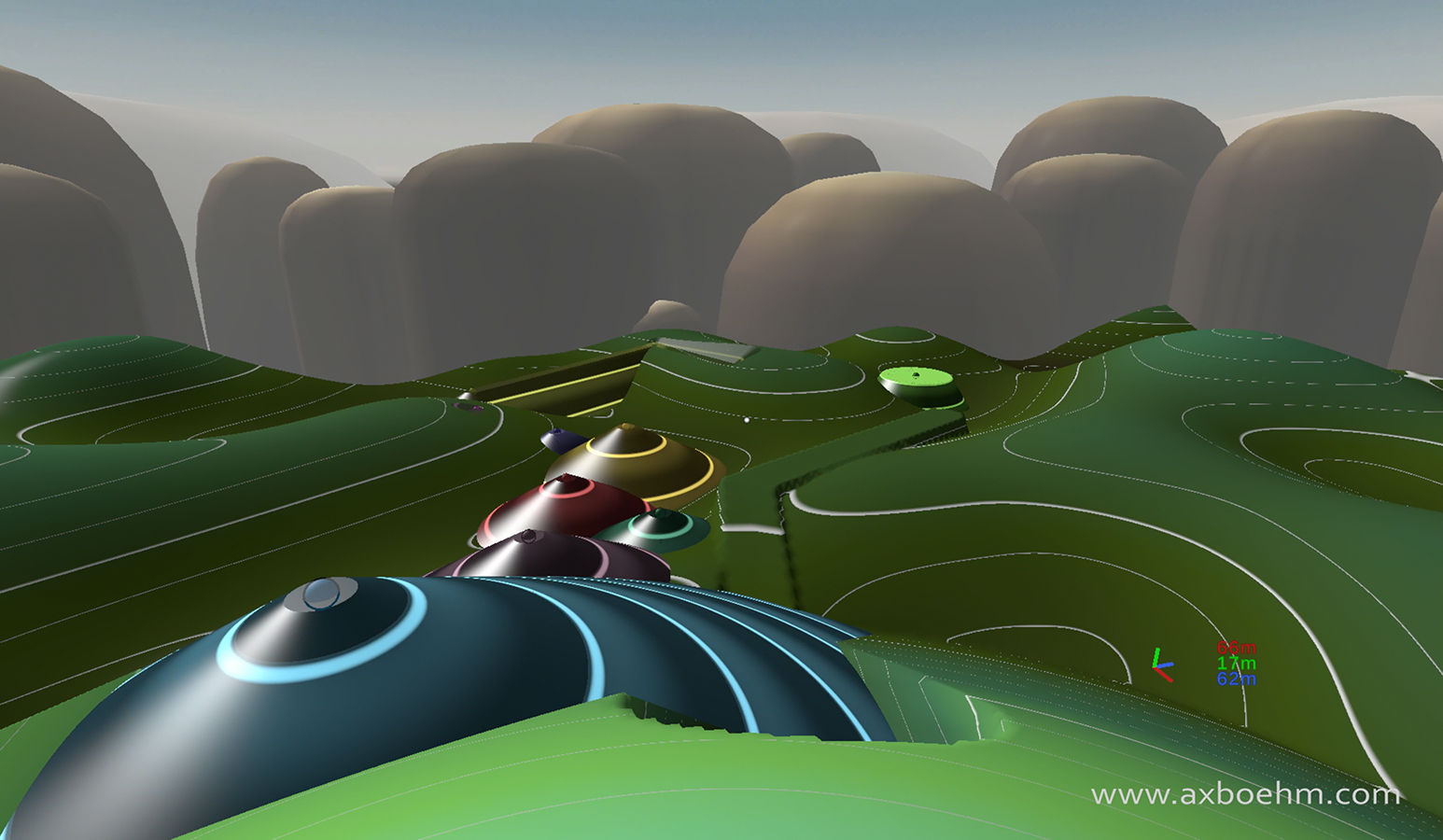

To let the user modify the terrain, intermediate geometry can be created and adjusted in real time and then permanently applied to the terrain.

Spheres serve as control points for this intermediate geometry. Each sphere defines the tip of a cone. The cone can have a truncated top to form a platform. Both the radius of that platform and the angle of the cone can be adjusted.
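The cone described above can be sketched as a height function over the horizontal plane. This is an illustrative Python sketch, not the application's code. It assumes a Y-up coordinate system, a slope angle measured from the horizontal, and that "applying" the cone means raising the terrain wherever the cone is higher. The names `cone_height` and `apply_cone` and all parameters are hypothetical.

```python
import math


def cone_height(px, pz, cx, cy, cz, platform_radius, slope_deg):
    """Height of a truncated cone at horizontal position (px, pz).

    (cx, cy, cz): control sphere position; cy is the platform height.
    platform_radius: radius of the flat top (0 gives a pointed cone).
    slope_deg: assumed angle of the cone's side, from the horizontal.
    """
    distance = math.hypot(px - cx, pz - cz)
    if distance <= platform_radius:
        return cy  # on the flat platform
    drop = (distance - platform_radius) * math.tan(math.radians(slope_deg))
    return cy - drop


def apply_cone(heights, cell_size, cx, cy, cz, platform_radius, slope_deg):
    """Return a new height grid raised to the cone where the cone is higher.

    heights: list of rows of terrain heights, indexed [row][col], with
    grid cell (row, col) located at x = col * cell_size, z = row * cell_size.
    """
    return [
        [
            max(h, cone_height(col * cell_size, row * cell_size,
                               cx, cy, cz, platform_radius, slope_deg))
            for col, h in enumerate(row_values)
        ]
        for row, row_values in enumerate(heights)
    ]


flat = [[0.0] * 3 for _ in range(3)]
raised = apply_cone(flat, 1.0, cx=1.0, cy=2.0, cz=1.0,
                    platform_radius=0.0, slope_deg=45.0)
```

Taking the maximum only ever raises terrain. Carving into the terrain would need a different blend, for example an inverted cone combined with a minimum.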

Spheres can also be linked together to add connecting geometry between them.
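The text does not specify the shape of the connecting geometry, so the following Python sketch is only one plausible interpretation: a ridge that runs along the line between two linked spheres, with its crest height interpolated between them and its sides falling off at the cone's slope. The function `link_height` and its parameters are assumptions made for this example, not the application's code.

```python
import math


def link_height(px, pz, a, b, platform_radius, slope_deg):
    """Height of a ridge connecting control points a and b at (px, pz).

    a, b: (x, y, z) positions of two linked spheres, Y up.
    platform_radius: half-width of the flat crest of the ridge.
    slope_deg: assumed side angle, measured from the horizontal.
    """
    ax, ay, az = a
    bx, by, bz = b
    dx, dz = bx - ax, bz - az
    length_sq = dx * dx + dz * dz

    # Project the query point onto the horizontal segment a-b and clamp
    # to the segment ends; t is 0 at a and 1 at b.
    if length_sq == 0.0:
        t = 0.0
    else:
        t = ((px - ax) * dx + (pz - az) * dz) / length_sq
        t = min(max(t, 0.0), 1.0)

    # Closest point on the segment, with interpolated crest height.
    qx, qz = ax + t * dx, az + t * dz
    qy = ay + t * (by - ay)

    distance = math.hypot(px - qx, pz - qz)
    drop = max(distance - platform_radius, 0.0) * math.tan(math.radians(slope_deg))
    return qy - drop


a = (0.0, 10.0, 0.0)
b = (10.0, 20.0, 0.0)
print(link_height(5.0, 0.0, a, b, platform_radius=1.0, slope_deg=45.0))
```

Because the projection is clamped to the segment, the ridge ends in the same rounded shape as a single cone at each sphere, so the link and the cones join without a gap.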